I briefly investigated whether we could replace a centralized computer and meters of electrode wire with a number of smaller computers connected wirelessly.

During development, it would also be handy to collect the raw data from the Inertial Measurement Unit (IMU) and build the movement model on a computer with more memory, since the total memory capacity of this microcontroller is only 1 MB and it has no storage device where we could keep this kind of data.

Wireless IMU Transfers

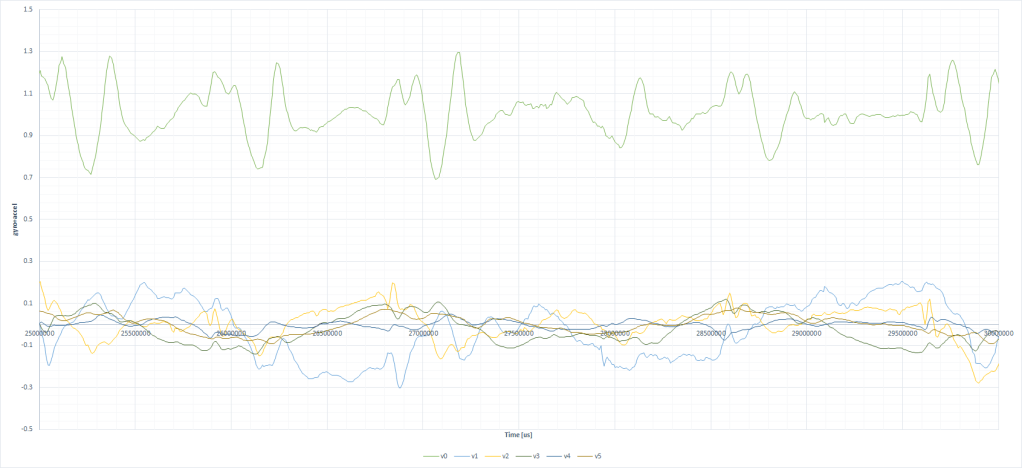

I have been testing the tiny Arduino Nano 33 BLE Sense, and in particular its ability to transfer sensor data wirelessly in real time via Bluetooth Low Energy (BLE).

Figuring out how to transfer the required amount of data at the required rate was a bit complicated. I first tried to read the real-time data (119 Hz) frame by frame using a small Python program on my laptop, but there seemed to be some sort of overhead involved in sending each update. The problem went away when I changed the protocol to send 7 frames at a time (17 updates per second, 224 bytes each).
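To make the batching arithmetic concrete, here is a minimal sketch of how the receiving side could split one update into frames. The numbers 119 Hz, 7 frames and 224 bytes come from the test above. The frame layout is an assumption made for illustration only (8 little-endian 32-bit floats, which happens to fill the 32 bytes available per frame), and `unpack_update` is a hypothetical helper name.

```python
import struct

SAMPLE_RATE_HZ = 119      # IMU sample rate, from the text
FRAMES_PER_UPDATE = 7     # frames batched into one BLE update, from the text
UPDATE_SIZE = 224         # bytes per update, from the text

FRAME_SIZE = UPDATE_SIZE // FRAMES_PER_UPDATE        # 32 bytes per frame
UPDATE_RATE_HZ = SAMPLE_RATE_HZ / FRAMES_PER_UPDATE  # 17 updates per second

# Assumed layout (not from the original): 8 little-endian float32 values
# per frame, for example a timestamp, 3 accelerometer axes, 3 gyroscope
# axes and one spare value.
FRAME_FORMAT = "<8f"
assert struct.calcsize(FRAME_FORMAT) == FRAME_SIZE


def unpack_update(payload):
    """Split one 224-byte update into a list of 7 frames (tuples of floats)."""
    if len(payload) != UPDATE_SIZE:
        raise ValueError(f"expected {UPDATE_SIZE} bytes, got {len(payload)}")
    return list(struct.iter_unpack(FRAME_FORMAT, payload))
```

The payload would be whatever bytes the BLE client hands over for each update. Checking the length first makes truncated updates fail loudly instead of silently shifting the frame boundaries.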

Also, the range of the Bluetooth LE signal was not great. When I walked around the living room, the signal was lost at times once the distance between the laptop and the Arduino exceeded 5 meters or so.

Before using Bluetooth LE in the future, we would need to investigate the cause of the low frame rate, and how often dropouts occur when sender and receiver are close.

Distributed Data Collection

If we are to collect and combine sensor data from different parts of the body, we should investigate the latency characteristics of BLE transfers, and consider whether we can compensate for data arriving with varying delays when performing movement model inference.

We might be able to include movement data from remote locations even when it is received with a delay, since the model already has a temporal view in the form of so-called speed vectors. Even though we observe the data late, we can still incorporate it into the model by adjusting it according to the speed and the time that has passed since the observation was made.

When updating the model with observations, we use an exponential filter, so that older observations are slowly forgotten. Whenever we observe something recent, we calculate a weighted average of the existing information and the new observation, giving the new information more weight when the existing data is old.
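A minimal sketch of such a time-aware exponential filter could look like this. The weighting `1 - exp(-age / time_constant)` is one common choice and an assumption here, not necessarily the exact formula used in the model. `ExponentialFilter` and `time_constant_s` are hypothetical names.

```python
import math


class ExponentialFilter:
    """Scalar exponential filter whose blend weight depends on elapsed time."""

    def __init__(self, time_constant_s):
        self.time_constant_s = time_constant_s  # assumed tuning parameter
        self.value = None
        self.last_update_s = None

    def update(self, observation, now_s):
        if self.value is None:
            # First observation: nothing to blend with yet.
            self.value = observation
        else:
            age_s = max(0.0, now_s - self.last_update_s)
            # The older the existing estimate, the closer this gets to 1,
            # so the new observation counts for more.
            weight_new = 1.0 - math.exp(-age_s / self.time_constant_s)
            self.value = (1.0 - weight_new) * self.value + weight_new * observation
        self.last_update_s = now_s
        return self.value
```

With a time constant of one second, an observation arriving about 0.69 seconds (ln 2) after the previous one is blended half and half with the existing estimate, while one arriving after a long gap almost replaces it.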

We can probably use the same approach when we receive old data, so that the data is added to the model with a lower weight because it is not recent. Exact formulas are of course required to figure out how to combine this information correctly. I would expect the simplest way to handle this is to keep speed vectors for each remote location. This way, we can cope with variations in delay, refresh rates and dropouts independently for each source. However, this increases the amount of RAM required for tracking.
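As a rough sketch of this idea, and not the exact formulas, the tracker below keeps one position and one speed vector per remote location. A delayed observation is down-weighted by `exp(-delay / time_constant)`, and the current position is estimated by extrapolating along the speed vector. The class names, the weighting function and the choice to ignore out-of-order samples are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class SourceState:
    position: list   # blended position at time_s
    speed: list      # speed vector, in position units per second
    time_s: float    # measurement time of the latest accepted sample


class DelayTolerantTracker:
    def __init__(self, time_constant_s=0.5):
        self.time_constant_s = time_constant_s  # assumed tuning parameter
        self.sources = {}                       # one SourceState per location

    def observe(self, source, position, observed_s, now_s):
        """Blend in a sample measured at observed_s; return the weight used."""
        state = self.sources.get(source)
        if state is None:
            zeros = [0.0] * len(position)
            self.sources[source] = SourceState(list(position), zeros, observed_s)
            return 1.0
        dt = observed_s - state.time_s
        if dt <= 0.0:
            return 0.0  # out-of-order or duplicate sample: ignored in this sketch
        delay_s = max(0.0, now_s - observed_s)
        weight = math.exp(-delay_s / self.time_constant_s)  # older data counts less
        for i, observed in enumerate(position):
            predicted = state.position[i] + state.speed[i] * dt
            blended = predicted + weight * (observed - predicted)
            measured_speed = (blended - state.position[i]) / dt
            state.speed[i] = (1.0 - weight) * state.speed[i] + weight * measured_speed
            state.position[i] = blended
        state.time_s = observed_s
        return weight

    def estimate(self, source, now_s):
        """Extrapolate the last blended position along the speed vector."""
        state = self.sources[source]
        dt = now_s - state.time_s
        return [p + v * dt for p, v in zip(state.position, state.speed)]
```

Each source carries its own position, speed vector and timestamp, which is where the extra RAM goes: the cost grows linearly with the number of remote locations.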