TRANSLATION ACCURACY

Translating a sequence of vectors from an accelerometer into movements is not a simple task when it must be done accurately and with limited computational resources.

Accuracy here also relates to how the underlying model reacts to previously unseen movements. Since this device is attached to a person in motion, it must perform muscle activations only when it is certain about its conclusions. If the user suddenly decides to start sprinting, and the machine has never seen that before, it would be a critical failure if the machine caused the user to fall by activating leg muscles at the wrong times.

The Model

To enable reasoning about model accuracy, I believe we should be using a generative model for this problem, since one of the main features of generative models is their ability to tell whether observations are similar to what was used during training.

I came up with a simple model: for each of a number of movement progress points, it contains a mean and a standard deviation for each dimension of the movement vectors.
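As a rough illustration of such a model, here is a minimal sketch that assumes each dimension is treated as an independent Gaussian at every progress point. The names `fit_progress_model` and `log_likelihood`, the `min_std` floor, and the independence assumption are mine, not taken from the released code. A low log-likelihood is the signal that an observation does not resemble the training data.

```python
import math

def fit_progress_model(samples_by_point, min_std=1e-3):
    """Fit a mean and standard deviation per dimension for each progress point.

    samples_by_point[p] is a list of training vectors observed at progress point p.
    min_std is a hypothetical floor that avoids division by zero later on.
    """
    means, stds = [], []
    for samples in samples_by_point:
        dims = len(samples[0])
        count = len(samples)
        mu = [sum(v[d] for v in samples) / count for d in range(dims)]
        sigma = [
            max(min_std, math.sqrt(sum((v[d] - mu[d]) ** 2 for v in samples) / count))
            for d in range(dims)
        ]
        means.append(mu)
        stds.append(sigma)
    return means, stds

def log_likelihood(vector, mu, sigma):
    """Log-density of one observation under independent Gaussians per dimension."""
    total = 0.0
    for x, m, s in zip(vector, mu, sigma):
        z = (x - m) / s
        total += -0.5 * z * z - math.log(s) - 0.5 * math.log(2 * math.pi)
    return total

# Two progress points, two training vectors each (made-up numbers).
means, stds = fit_progress_model([
    [[0.0, 0.0], [2.0, 2.0]],
    [[5.0, 5.0], [7.0, 7.0]],
])
familiar = log_likelihood([1.0, 1.0], means[0], stds[0])
unseen = log_likelihood([9.0, -9.0], means[0], stds[0])
```

Here `familiar` is much higher than `unseen`, so a threshold on this value could be used to withhold muscle activations when the movement is unfamiliar.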

Given this simple probabilistic model, the main challenge is evaluating it along the temporal dimension while still using a minimum of computational resources. This was achieved by assuming that movement speed is constant and examining a fixed number of speeds. By using exponential filtering, the number of calculations required for each observation is limited.
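One way this could work is sketched below. It is my own guess at the structure, not the prototype's actual algorithm. It assumes speeds are whole numbers of progress points per observation, and that for every (speed, progress point) hypothesis an exponentially filtered log-likelihood is kept. The class name `SpeedBankTracker` and the smoothing factor `alpha` are hypothetical. The work per observation is fixed: one likelihood per progress point plus one blend per hypothesis.

```python
import math

def log_likelihood(vector, mu, sigma):
    """Log-density under independent Gaussians per dimension."""
    return sum(
        -0.5 * ((x - m) / s) ** 2 - math.log(s) - 0.5 * math.log(2 * math.pi)
        for x, m, s in zip(vector, mu, sigma)
    )

class SpeedBankTracker:
    """Tracks movement progress under a fixed set of constant-speed hypotheses."""

    def __init__(self, means, stds, speeds, alpha=0.2):
        self.means, self.stds = means, stds
        self.speeds = speeds      # progress points advanced per observation
        self.alpha = alpha        # exponential filter weight for new evidence
        self.n = len(means)
        # scores[k][p]: filtered evidence for "at point p, moving at speeds[k]"
        self.scores = [[0.0] * self.n for _ in speeds]

    def update(self, vector):
        # Likelihood per progress point is computed once and shared by all speeds.
        ll = [log_likelihood(vector, self.means[p], self.stds[p]) for p in range(self.n)]
        best = None
        for k, step in enumerate(self.speeds):
            old = self.scores[k]
            new = [0.0] * self.n
            for p in range(self.n):
                q = (p + step) % self.n   # where this hypothesis is now
                new[q] = (1 - self.alpha) * old[p] + self.alpha * ll[q]
                if best is None or new[q] > best[2]:
                    best = (q, step, new[q])
            self.scores[k] = new
        return best  # (progress point, speed, filtered score)

# Synthetic cyclic movement with 8 progress points laid out on a circle.
n = 8
means = [[math.cos(2 * math.pi * p / n), math.sin(2 * math.pi * p / n)] for p in range(n)]
stds = [[0.2, 0.2] for _ in range(n)]
tracker = SpeedBankTracker(means, stds, speeds=[1, 2, 3])
for t in range(16):
    point, speed, score = tracker.update(means[(2 * t) % n])
```

In this synthetic run the observations advance two points per step, and the tracker settles on speed 2 at the current progress point. The returned score could double as the confidence value used to decide whether to activate muscles at all.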

Evaluation

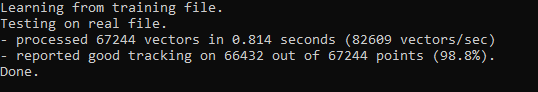

I have now added the first version of the code for building movement translation models. Some enhancements and configuration options are still needed, and code documentation is still pending. The code already existed in the prototype but needed some cleanup in order to be usable in a release component.

Currently, only a Symmetric Movement Translator has been added, in which we teach the machine how to activate muscles on one side of the body, and the machine applies the same activations to the other side with a half-movement delay.
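The half-movement delay can be illustrated with a small sketch. It assumes the taught activations are stored as a table indexed by movement progress in the range 0 to 1. The function name `symmetric_activations` and the table layout are hypothetical and not taken from the released translator.

```python
def symmetric_activations(taught_profile, progress):
    """Return (taught side, mirrored side) activation for a progress in [0, 1).

    taught_profile[i] is the activation taught for the i-th slice of one full
    movement cycle. The mirrored side replays the same profile half a cycle later.
    """
    n = len(taught_profile)
    i = int(progress * n) % n
    j = (i - n // 2) % n          # half-movement delay for the other side
    return taught_profile[i], taught_profile[j]

# Made-up profile: the taught side fires only in the first quarter of the cycle.
profile = [1.0, 0.0, 0.0, 0.0]
start = symmetric_activations(profile, 0.0)
halfway = symmetric_activations(profile, 0.5)
```

With this profile the taught side is active at the start of the cycle and the mirrored side is active halfway through it.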

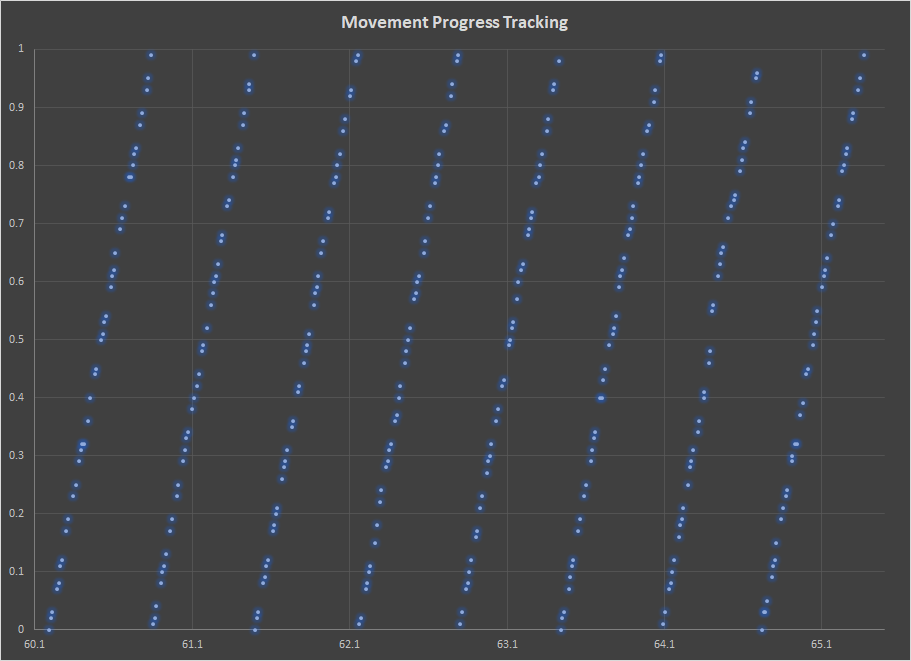

I extracted the translator output for data from a 5 km run I did with the prototype machine, and the translator appears to do a pretty good job of tracking the movement progress.

Even on a relatively slow processor, we should have enough computational capacity to handle a number of underlying models, so that walking, running and sprinting can all be supported at the same time.